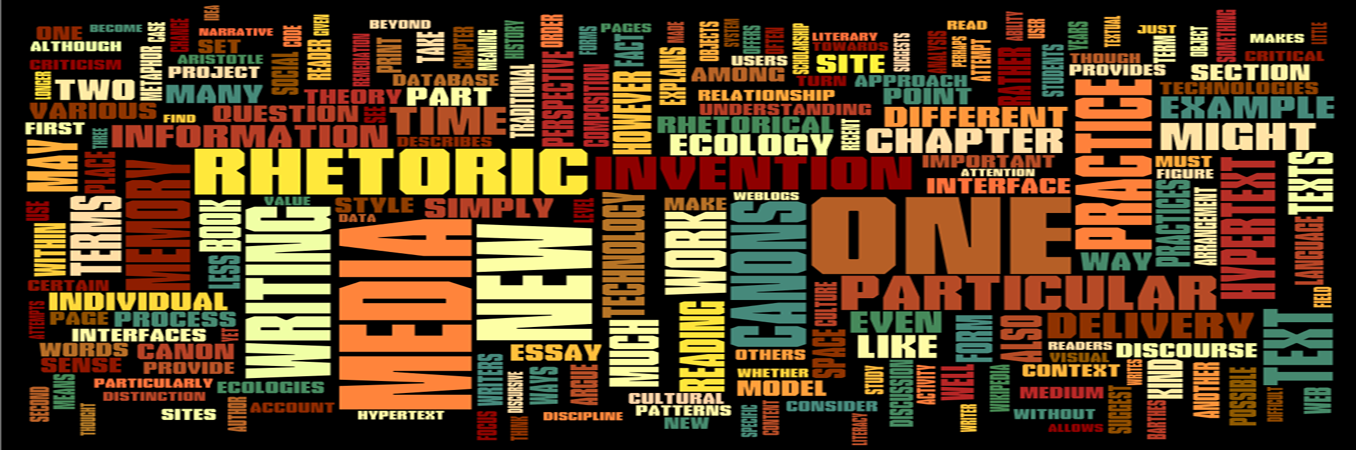

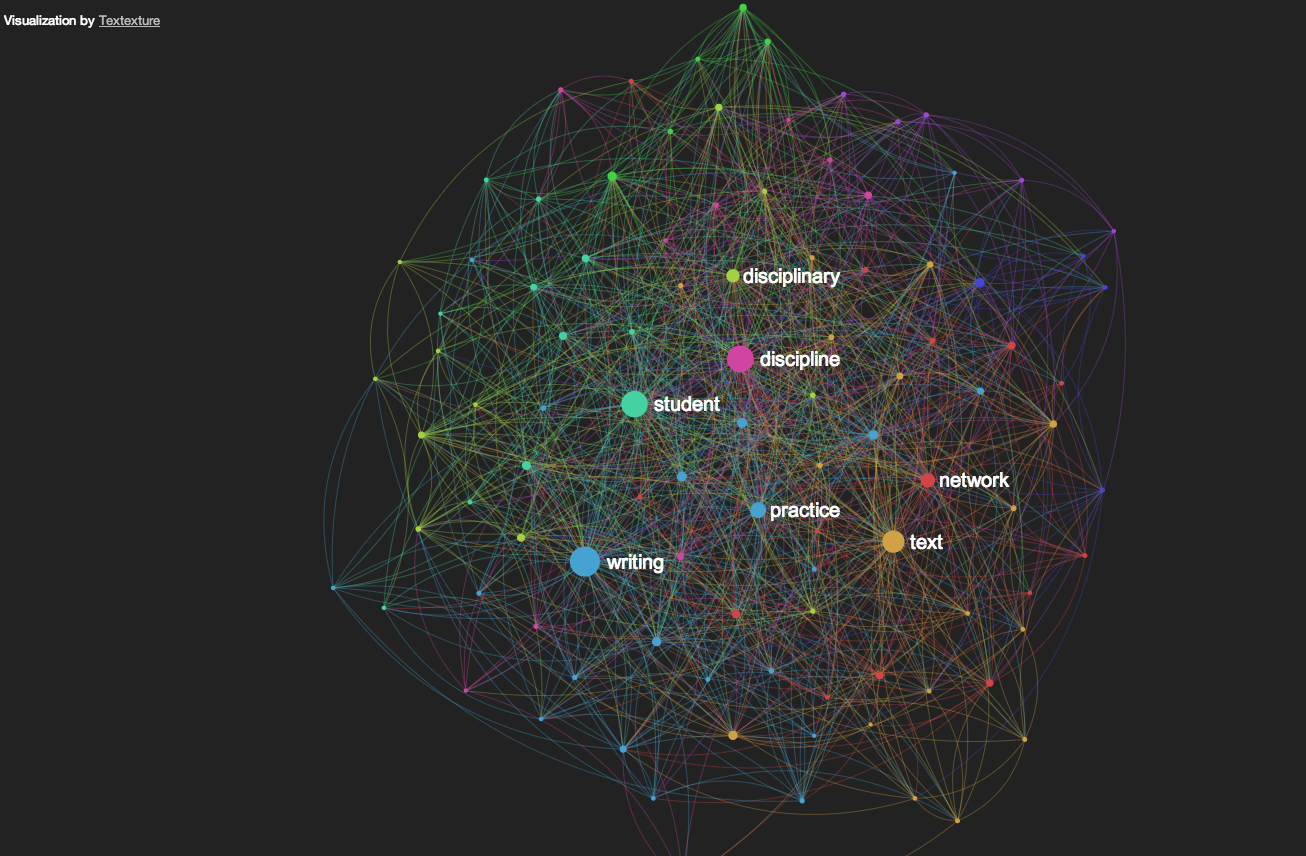

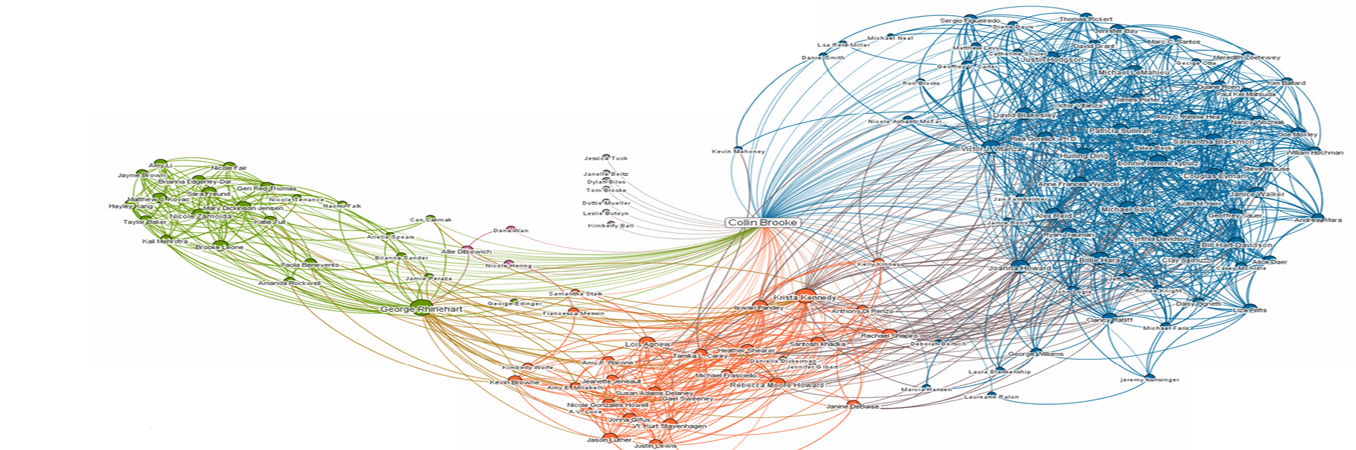

Hi there. I’m Collin Brooke, and I’m an Associate Professor of Rhetoric and Writing at Syracuse University, where I’ve been since the fall of 2001. I’m the author of *Lingua Fracta: Towards a Rhetoric of New Media* (2009) and a number of articles and chapters, some of which you’ll find on this site under Publications.

I’ve been blogging, more or less regularly, since about 2003, and this is the second iteration of my weblog. With the emergence of social media, I tend to split my time between this site and services like Facebook and Twitter, so while I’m online pretty constantly, this space is for my longform writing. I write here mostly about academic issues, although you’ll find an occasional pop culture post as well. The relationship between rhetoric and technology has been an enduring focus of my work since graduate school, and it tends to inform my writing in this space as well.

I’m in the middle of moving this site over to a new structure and theme, so if parts of it don’t quite make sense, that’s almost certainly the reason.